Introduction to Rons Data Stream

Rons Data Stream was created to automate the cleaning of multiple data files (or folders). The tool is especially useful for users who need to process files repetitively on a regular basis because, once the settings have been put in place, they are saved and can be used again, which saves a lot of time. After that, it only takes a few steps before clicking on the "Process" button and updating the files whatever their size or number.

The application can also be used to convert files formats (Output Formaters settings) or to split files (Output Shapers settings). Multiple Source containers and formats, Cleaners and Output formats and locations can be configured. Everything will be processed in one go respecting the order of the tasks.

Two areas need to be set up to process files automatically: a Cleaner and a Job. The Cleaner represents the "What (happens)" and the Job the "To What".

The Job is the area where the source file(s), format and output destination are determined and selected.

The actual editing action(s) are defined in a Cleaner that is incorporated into the Job settings.

The Cleaner section offers a list (or a palette) of numerous data editing operations that can be selected and set up. Once the rules have been defined and organized, the Cleaner can be named and saved.

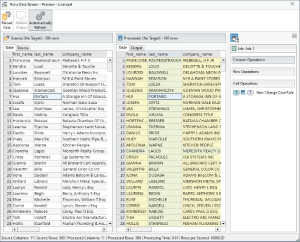

Finally, before running the editing process, the file(s) to be edited can be viewed in a Preview Screen that displays the grid with the data "Before" and "After" and the rules that are involved.

Further, we are going to give more detailed information concerning the settings of the Job section, then about the ones of the Cleaner section, and finally, we will explain how to preview and process the Job together with the Cleaner and finalize a task.

1. The Job

1. Concept of a Job

The aim of Rons Data Stream is to shave hours off our customers day by allowing one-click data processing, whilst drinking coffee. The Cleaners describe what happens to the data, so a way of describing which data to apply the Cleaner to was necessary. The Job does that.

2. Job Rules

Jobs contain a list of data sources, a list of Cleaners, and a list of outputs, which is all the information needed to process data with a button press. Multiple sources or outputs can be selected allowing the processing of multiple files at the same time.

Jobs can be saved in File Explorer and re-used later.

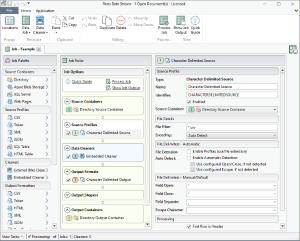

Clicking on New Job on the Start Page opens a new Job tab. There are two predominant areas: the Job Palette and the Job Rules. The middle section displays the details of the selected Job Rules. In the right corner, a button opens a Preview Side Panel (see 4. The Preview).

There are in total seven sections to be set up:

- Source Containers (Essential)

- Source Profiles (Essential)

- Data Cleaners (Optional)

- Output Formats (Essential)

- Output Shapers (Optional)

- Output Containers (Essential)

- Output Loggers (Optional)

However, five of them are essential sections that need to be set up with the necessary information in order to run the Job. More detailed information concerning each of these five sections is given below.

1. Source Containers

As described by the name, the Source Containers section contains the definition of entities that contain data for processing, in the form of files or data tables. The Palette contains these options for data containers:

- Directory

- Azure Blob Storage

- SQL Server

- Web Pages

Clicking on one of the Palette options adds a corresponding rule to the Job Rules section with a number. For example, when clicking on Directory, option '1 - Directory Source Container' is added as the first rule to the Job Rules section. Multiple source container may be added and the rules may be moved up, down, duplicated or deleted using the menu options in the menu on the top.

When clicking on the new rule, it opens the settings in the middle of the screen. From there, the directory containing the files can be browsed. When a folder is selected, the files appear in the Job Output area after refreshing.

2. Source Profiles

Now that we have the directory Source Container, we need to choose the format of the files to be processed. Source Profiles define how the data in the Source Container is filtered and/or parsed.

Typically, they contain a filter (like '*.csv') to select data sources in the container, and information about how to read them. For example CSV files need to have the type of delimiters to use in order to be read.

Multiple Profiles can use one Source Container.

The Source Profiles Palette displays a list of formats:

- CSV (or any character Delimited format)

- Token

- XML

- JSON

- SQL Table

- HTML Table

As an example, select CSV to add it to the Job Rules area. The corresponding rule is numbered and added to the Job Rule section as 1- Character Delimited Source and it opens its screen on the right where further configurations can be done (filters, delimiters, etc.).

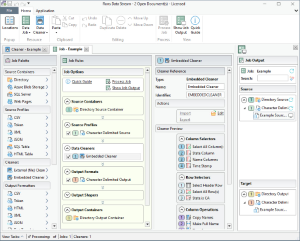

3. Data Cleaners

Editing action(s), are defined by Cleaners that are linked to the Job settings. Cleaners can be shared between multiple Jobs and can be saved in File Explorer and re-used later.

In the Job section, this is the place where one or more Cleaner(s) can be added for the Job to be run. The Cleaner configuration has its own section in this guide (See 2. Data Cleaner).

What is important to know at this stage is that either an External (File) Cleaners or an Embedded Cleaner(s) can be selected from the Palette.

External (File) Cleaners: Cleaners can be saved and shared between Jobs, so this is where existing Cleaners can be added. Simply browse the path in the middle section to select the existing Cleaner(s). After a Cleaner was selected, it is possible to make changes to it. Clicking on Edit in the middle section. It will open the Cleaner in another tab where changes can be made and saved.

Embedded Cleaner(s): Selecting it means that a new Cleaner must be created and this can be done by clicking on Edit in the middle section. It will open the Cleaner in a new tab where changes can be made and saved.

Important tip: If you would like to check if changes the Cleaner(s) are making to the data are as expected, go back to the Job tab and open the Preview side panel. Each file can be previewed to shows the outcome of the editing action(s). Make sure to refresh that section regularly.

4. Output Formats

The Output Formats section is used to establish the format in which the file(s) output after processing.

Note: Rons Data Stream can also be simply used as a File Converter.

The format set up Palette selection is:

- CSV

- Token

- HTML

- XML

- JSON

- Text Template

- SQL Table

- SQLite Database

Several Output formats may be selected. For each selected format, the relating configuration section requires to choose an Output Container. That way the different formats can be saved into their own folders.

At this point, if the Output Containers weren't selected yet from the Palette, then they wouldn't show in the drop down menu in the Output Format setting area. Select them first from their Palette, then go back and then they will be listed in the drop down menu and it will be possible to select them. The Job wouldn't be able to be processed without them.

Important: In all settings, whenever a new name (or an identifier) needs to be created, one is always created automatically as it's a compulsory field. However, note that it can be renamed.

5. Output Shapers

The Output Shapers section is optional. The set up of this section allows to split file(s) while they are processed. There are four different settings types (four different ways to split) that can be selected from the Palette:

- Split - Row Count

- Split - Row Ratio

- Split - Row Filter

- Split - Column Value

When the palette choice is selected, it is added to the rule and can be set up in the configuration screen.

6. Output Containers

Similar to Source Containers, Output Containers do exactly as the name implies: contain the outcome of file processing.

The Output Containers Section is the area used to determine where the processed files will be saved. In the Palette, three types of containers are listed:

- Directory

- Azure Blob Storage

- SQL Server

7. Output Loggers

When processing large data source it can be difficult to spot errors. Fortunately, processed files automatically generate a log file. Loggers (or log files) can be configured and associated with output formatters. Output Loggers require an Output Container, which can be separate from the data Output Container(s).

In the Output Loggers section, one can set up where the log file is to be saved by selecting the appropriate output container that has been created.

Because several Output Containers might have been created, then there might be different logfiles. Therefore, logfiles might need to be saved into different destinations. In this case, several logfiles can be selected from the Palette and then linked to each Output Containers that will be listed in the drop down menus. However, this is really a matter of preference and the logfile set up is optional.

2. The Cleaners

1. Concept of a Cleaner

Cleaners are the core of Rons Data Stream.

Now that we have the "Where and How" set up (The Job), we need the "What" (happens): the Cleaner.

A Cleaner defines the editing operations to be processed through the data files when running the Job. One or more cleaners may be saved and added to a Job setting in any order of execution.

After the Cleaners are created and saved, they can be integrated into the Data Cleaners Job rules section. If more than one Cleaner is integrated, the Job will process them in their visible order.

In order to start cleaning or amending files, a Cleaner needs be set up with cleaning rules.

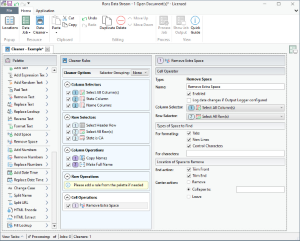

2. Cleaner Rules

The categories of rules are as follows:

1. Columns Selectors

Each Column Selector rule defines criterion to select one or more columns. Column Selectors are used throughout a Cleaner to determine which columns a rule operates on.

Select the Columns where the editing will apply. There are three different selectors to be chosen from the Palette:

- All Columns (Selected by default)

- Column Range

- Column List

This section is compulsory and must contain at list one rule.

2. Rows Selectors

Each Row Selector rule defines criterion to select one or more rows. Row Selectors are also used throughout a Cleaner to determine which rows a rule operates on.

Select the Rows where the editing will apply. There are five different Row Selectors that can be picked from the Palette:

- Header Row (Selected by default)

- All Rows (Selected by default)

- Row Range

- Empty/Filled Rows

- Filter

Header Row and All Rows are selected by default which means that the editing rules will be applied all over the data sheet including the Header Row. However, even if they are selected by default for convenience, the rules can easily be deleted or deactivated by removing the click from the box if needed.

This section is compulsory and must contain at list one rule.

3. Column Operations

Column operations define what actions are to be performed on the columns, and most require Column Selectors. For example, delete or merge columns.

Editing actions that are specific to columns can be set up in this area. There are seven Column Processors that can be picked from the Palette:

- Add Columns(s)

- Update Column

- Copy Column

- Move Column

- Delete Column

- Merge Column

- Split Column

4. Row Operations

Row operations define what actions are to be performed on the rows, and all require Row Selectors, and some Column Selectors. For example, delete or duplicate rows.

There are three operations specific to rows that can be set up in this area.

- Add Row

- Delete Row

- Split Row

5. Cell Operations

Cell operations define what actions are to be performed on each cell, and all require Row Selectors, and Column Selectors. For example, adding row number to a cell.

There are twenty-two Operations specific to cells can be set up in this area.

- Add text

- Add Expression Text

- Add Random Text

- Pad Text

- Remove Text

- Replace Text

- Replace Lookup

- Reverse Text

- Format Text

- Add Space

- Remove Space

- Add Numbers

- Remove Numbers

- replace Numbers

- Add Date Time

- Replace Date Time

- Change Case

- Split Name

- Split URL

- HTML Encode

- HTML Extract

- Fill Lookup

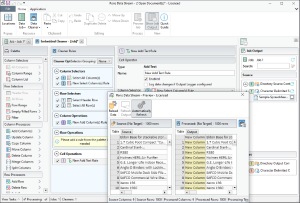

3. Preview and Finalizing the Job

1. Preview

After the Job has been fully configured, the output can be previewed to see the result of the rules before execution.

The preview button opens a side panel displaying the Source and Target/Output information. Under the Source section, a preview panel is available for each file showing the data before and after.

Under the Target section, the same preview panel is available, this time showing the files not only with outcome of the Cleaners but also, potentially, the data display with new format(s).

2. Processing the Job

The final steps happens in the Job:

When all the Jobs rules have been set up and include a Cleaner, the Job can be run by clicking on the Job Process button in the top menu or in the Job Options area of the Job Rules section.

Conclusion

Once Jobs and Cleaners have been set up, they can be combined and run anytime again. There’s no limit to the number of Jobs and Cleaners as they are saved in the File Explorer. This is the reason why Rons Data Stream is so powerful.

Setting up the Jobs and Cleaners take a little time at the beginning but it's so worth it: once they have been created, they can be re-used, re-combined in different ways and run again and again, in no time.

Related Information

CSV Editing

Rons Data Stream

Application

Examples